Why Yōzefu?

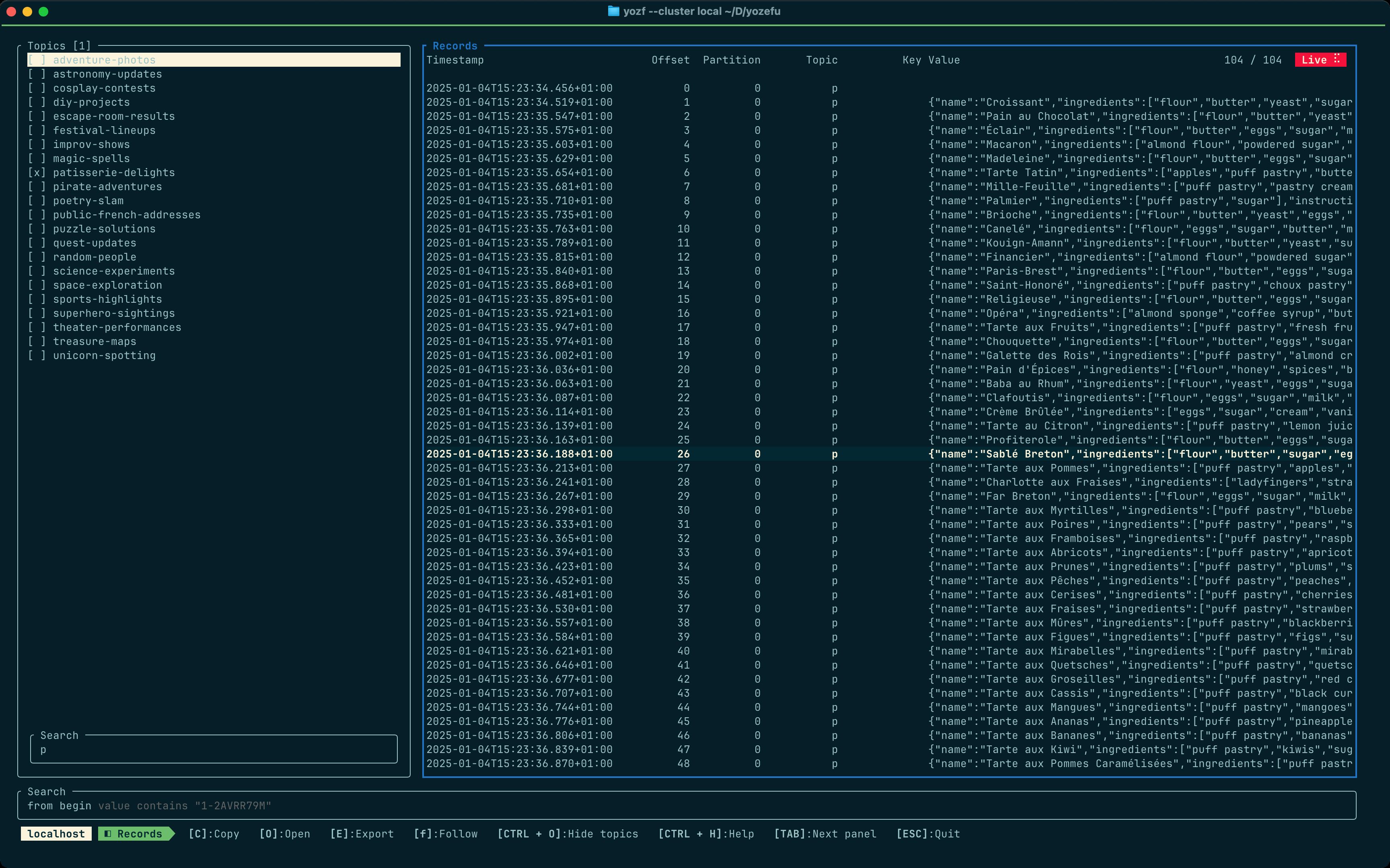

Over the last 4 months, I've been working on Yōzefu, a terminal user interface to search for data in a Kafka cluster. In this article, I'll explain why I have chosen the terminal as a user interface and how I built Yōzefu. Opinions are my own and not the views of my employer.

An interactive terminal user interface (TUI) application for exploring data of a kafka cluster.

If you are familiar with Apache Kafka, feel free to move on to the next section.

Apache Kafka is a distributed event store platform written in Java. Software can connect to a Kafka cluster and read/write what we call records. A record is a data envelope composed of different attributes:

The key, an optional array of bytes,

The value, an optional array of bytes,

A set of headers,

The timestamp of the record in milliseconds since epoch.

To make records readable for computers, we usually specify a format for the key and the value. For example, we can decide the key will be a version 4 UUID and the value a json payload.

key → 15AE6B8A-C63A-4E5A-B306-C32F88167CD6

value → [{"item": "Fajitas", "quantity": 2, "pickup": true}]

timestamp → 1736097744249

headers → parent-trace : 75210a19-cff8-4f1a-b3f8-0a375eb3cb96

environment : development

position : 46.1541399,-1.1716597

Records are deposited to a letter box called a topic. A topic is identified by a unique name. Usually, a topic contains a specific type of record. For instance, if you work in a restaurant, you can define 2 topics:

my-restaurant.orderscontaining records related to customer orders.my-restaurant.ingredients.stockswith the remaining stocks for ingredients.

There are 2 types of actors in Apache Kafka:

The producer that publishes a record to a topic.

The consumer that consumes records from 1 or multiple topics.

Producers and consumers can be written with different programming languages.

Unlike message queue systems (like RabbitMQ), a published record stays in the topic for a certain period of time. In another words, consuming a record doesn't remove it from the topic.

If you want to delete a record, either you wait for the record to be expired or you empty the topics and therefore all the records.

That's it for Apache Kafka! Now you have enough information to understand the following parts. If you want to learn more about this technology, I recommend you the Confluent youtube playlist.

Today is Monday! I hate it because I'm the incident response team member that day. My ex team (salut 👋) is in charge of synchronizing data coming from different sources to an Elasticsearch cluster. We develop and maintain applications that transform and aggregate data. One of our constraints is that the pipeline must propagate fresh data to Elasticsearch as fast as possible, under 1 second.

My team decided to develop an Extract Transform Load (ETL) data pipeline. This pipeline relies a lot on Apache Kafka and Java:

There are 3 kafka topics, one for each step of the ETL process:

extractwhere the data is extracted from the source,transformwhere the data is transformed and enriched,loadwhere the data is loaded to Elasticsearch.

Today, we've been receiving incidents related to elasticsearch documents that seem to be updated late. Data take more than a second to be propagated to Elasticsearch.

What creates that latency?

Did we process a huge amount of data at that time?

Are our applications suffering?

Is there a temporary outage on Kafka?

Is the network having some trouble?

Are the databases slow to notify us?

Is Elasticsearch under pressure?

As you can imagine, there are so many causes that could explain latency in the pipeline. What will make this Monday (and the next days) even more complicated is that the databases, Elasticsearch, Kafka, the network are not under our responsibility. It means I have a very restricted scope to diagnose the latency.

Before pointing the finger at my colleagues, I thought It would be interesting to measure how long it takes us to synchronise the data, especially for data identified as slow. So I rolled up my sleeves and for every data with latency, I looked through 3 topics and make sure kafka records are published as soon as possible to the next topics.

Observability is one of the solutions to diagnose latency. Unfortunately, our applications are not openTelemetry-ready yet.

To diagnose the latency, I need to read the timestamp of the kafka records. They are plenty of tools to read data going through our kafka cluster. Here are some well known tools :

AKHQ, built with Micronaut and React. This is the tool we use at MAIF.

KafDrop, an open source Spring boot application.

Redpanda console, a web application written in Golang and React.

Conduktor Console, a web application with advanced search features. It requires PostgreSQL.

The kafka plugin for JetBrains IDEs written in Java.

I've been testing all tools trying to find the right shoe on my foot. These tools are great, they work well and the user experience is okay if you use the tool once a day. However, if you use these tools multiple times a day, the user experience decreases strongly. I still use these tools but in some specific usage context, they show some limitations:

You can only search in 1 topic: It means for N topics you want to search in, you need to open N browser tabs, select the topic and run a new search. It's like running a SQL query without being able to join tables. In advanced architectures, data can travel through multiple topics. Being able to search data on multiple topics would be a nice feature to have.

Most tools offer limited search features: contains, not contains, equals. They're usually the only operators you can use to search for kafka records. These are probably enough in most of the use cases but when you need to run precise queries, these operators are too limited and will probably give you results with a lot of noise.

Most tools are web-based applications: I love web applications, but here I am very disappointed in the user experience just because of one thing: the number of mouse interactions. Using the mouse is, in my own opinion, a disaster. If you think of running a SQL query, the only interactions you must execute are opening a console, writing your query and hitting the run key shortcut, simple.

Being a big fan a CLIs since I was 3 years old, I thought it would interesting to explore the possibilities to build an UI in the terminal.

Terminal User Interfaces (TUI) are software designed to run in a terminal. TUIs are everywhere and there is a good chance you use them every day: vim, tmux, htop, k9s, bacon or even midnight commander...

TUIs bring a visual dimension to your CLI software. With a large palette of characters offered by Unicode, a few tablespoons of colors, borders, tables, emojis, loading spinners, it has become possible to draw a nice-looking UI.

TUIs also offer a new way to interact with your software: with CLIs, you run a command, you get an output, you run another command and so on... It's a lot of back and forth interactions. With TUIs, you start a "session" and stick to it as long as you want.

A year ago, I came across this FOSDEM 2024 presentation named Introducing Ratatui: A Rust library to cook up terminal user interfaces. At that time, I was learning about Rust and thought it would be nice to give a try to this library. Ratatui allows you to build terminal user interfaces. It provides basic widgets that you can compose to draw a user interface. Combined with the clap crate, it's getting easier to build a TUI application.

I have a web development background. In the past, I used fronted frameworks such as React or lit-dev. So it felt natural to choose the component architecture pattern. The documentation is well written so it's nice to getting started quickly.

In Ratatui, a component is very similar to a web component in terms of API. It's a piece of code that renders characters in a given area of the terminal. A component can be a button, a text input, a table, a panel, a modal etc. In Yōzefu, every component must implement the Component trait:

pub trait Component {

/// Register an action handler to receive actions from the application

fn register_action_handler(&mut self, tx: UnboundedSender<Action>) -> Result<(), MyError>;

/// This identifier is unique per component

fn id(&self) -> ComponentName;

/// handle key events when a user types on the keyboard

fn handle_key_events(&mut self, key: KeyEvent) -> Result<Option<Action>, MyError>;

/// Update the state of this component with the given action

fn update(&mut self, action: Action) -> Result<Option<Action>, MyError>;

/// Draw the component on the terminal, similar to `render()` in React

fn draw(&mut self, f: &mut Frame<'_>, rect: Rect) -> Result<(), MyError>;

}

A component can be stateless or stateful. Once again, it's very similar to the React API back in the old days...

pub(crate) struct LinkToRepositoryComponent {}

impl Component for LinkToRepositoryComponent {

fn id(&self) -> ComponentName {

ComponentName::Link

}

fn draw(&mut self, frame: &mut Frame<'_>, rect: Rect) -> Result<(), MyError> {

let block = Block::default()

.borders(Borders::ALL)

.border_type(BorderType::Rounded)

.padding(Padding::symmetric(5, 0))

.style(Style::default());

let paragraph = Paragraph::new(vec![

Line::from("GitHub repository:"),

Line::from("https://github.com/MAIF/yozefu").bold(),

]);

frame.render_widget(paragraph.inner(block), rect);

Ok(())

}

}

Once you have defined your components, you can compose them to build a nice UI. Yozefu has multiple components, the main ones are:

TopicsComponent: Lists all the topics available in the Kafka cluster.RecordsComponent: Displays the records of the selected topic.RecordDetailsComponent: Displays details about a particular kafka record (key, value, partition, offset, schema ...).FooterComponent: Displays user notifications and the key shortcuts.helpComponent: Shows the help page.

Components are then grouped in a view. A view is also a component but its goal is to render a set of components together. And finally, we have the UI. The UI is responsible of:

Storing the global state of the application,

Managing the components to render based on user interactions,

Dealing with the focus state and the navigation history,

Subscribing to crossterm events and dispatch them to the visible components.

As I mentioned previously, I would like to improve the search experience because:

The operators are not fined-grained enough: meaning they generate a lot of noise in the results.

It takes time to craft a search query: you need to deal with select boxes, text inputs, date pickers and so on... It's not super convenient.

Sometimes, it's obscure how filters are interpreted: if I specify multiple search criteria, are they combined with an

ANDor anORoperator?

For Yozefu, I decided to develop a query language. It's inspired by SQL and it offers more possibilities to filter kafka records. Here is an example of a query in Yozefu:

from beginning -- From the beginning of partitions

where value.status == "started" -- Assume the value is a json object

or headers.trace-parent == "75210a19-cff8-4f1a-b3f8-0a375eb3cb96" -- Filter on the value of a header

and partition == 1 -- Only records from partition 1

and offset > 345 -- Offset of the record must be greater than 345

limit 10 -- Return the 10 first records only

The parsing was done with the nom crate. It's a parser combinator library that allows you to build parsers by combining small parsers together. I'ts very fun to use! Once the query is parsed, telling whether a kafka record matches the query is straightforward.

In terms of UI, I have created a SearchComponent. The search component is an input field where you can type your query. It highlights the syntax errors if your query is incorrect.

My experience with Ratatatui was great. I had a lot of fun developing Yōzefu. Here are some pros and cons I have identified about the library and more generally about TUIs:

Pros

Ratatui comes with a lot of built-in widgets. As a developer, you just need to compose with them to build enhanced widgets.

The library includes a nice layout system to structure and organize your UI.

I don't know if it's intentional but the API for stylizing components (wording, colors, fonts, borders) is very close to what we can find in the web ecosystem. To me, I had the same feeling as writing CSS code.

With Ratatui, you use Rust for the backend and the frontend.

TUIs are easy to deploy and distribute a TUI, it's just a binary to download.

TUIs are excellent if you work on remote servers or containers.

Building a TUI is a creative process. You are limited with what you can display in the terminal so you need to think of new ways to give feedback to the user. It was a refreshing experience.

Ratatui is a Rust library, so it comes with all the benefits of Rust: memory safety, performance, quality of crates...

The documentation is good and the community active. It was easy to find answers to my questions.

In general, TUIs consume less hardware resources than GUI-based applications or classic web applications.

Cons

There is a a bit of boilerplate code to getting started. It can be frustrating at the beginning. Fortunately, you can use a generator to bootstrap your project.

Rendering an app in the terminal can be tricky. Even though terminals have been popping up in the last few years with advanced features, you will be limited and won't get the same liberty as with web development.

There are few things I had to implement myself: components layers to add depth and a navigation history to implement a breadcrumb. It's not a big deal but it's something to keep in mind.

During the development phase, I faced issues around operating systems-specific keyboard modifiers. Basically, I had to limit myself to CTRL. Do not count on super on MacOS or alt on Windows.

I didn't have the time to optimize Yōzefu on Windows. It looks terrible and the rendering is pretty bad.

TUI applications might not be fully accessible.

Ratatui supports mouse events but I didn't use them. When you enable this feature you can't select and copy/pasta text from the terminal.

Yōzefu is a boring tool with the same features as alternative tools. But I believe it offers a better user experience:

You control everything with your fingies, no need to use a mouse.

I have developed a query language to search for records in Kafka. It's inspired by SQL and it offers more possibilities to filter kafka records.

Yōzefu has a nice feedback loop thanks to Rust, Ratatui and the terminal.

It has a

--headlessmode so you can use the tool in your shell scripts.If you think the query language is missing features, you can create a search filter. It relies on WebAssembly and gives you the possibility to extend the query language with user-defined filtering functions.

There are other cool features I haven't talk about it, so give it a try!

# 1. Download the tool,

# 2. Start a kafka cluster with docker,

# 3. Publish some records,

# 4. And execute `yozf --cluster localhost`

curl -L "https://raw.githubusercontent.com/MAIF/yozefu/refs/heads/main/docs/try-it.sh" | bash

An interactive terminal user interface (TUI) application for exploring data of a kafka cluster.